Zillow Data Time Series Analysis

Zillow Data Time Series Analysis

In this bog post we will deal with the time series analysis of of the Zillow data set which details the average house prices in every zipcode between April 1996 to April 2018. Our analysis can be found in this github repo.Of course not all zipcodes have data starting from April 1996. There are overall 14723 Zipcodes which is a lot of data. The question that we address in this project is the following. Imagine a real estate company has contracted us to analyze the data. The question that they want answered is

What are the 5 best zipcodes to invest in?

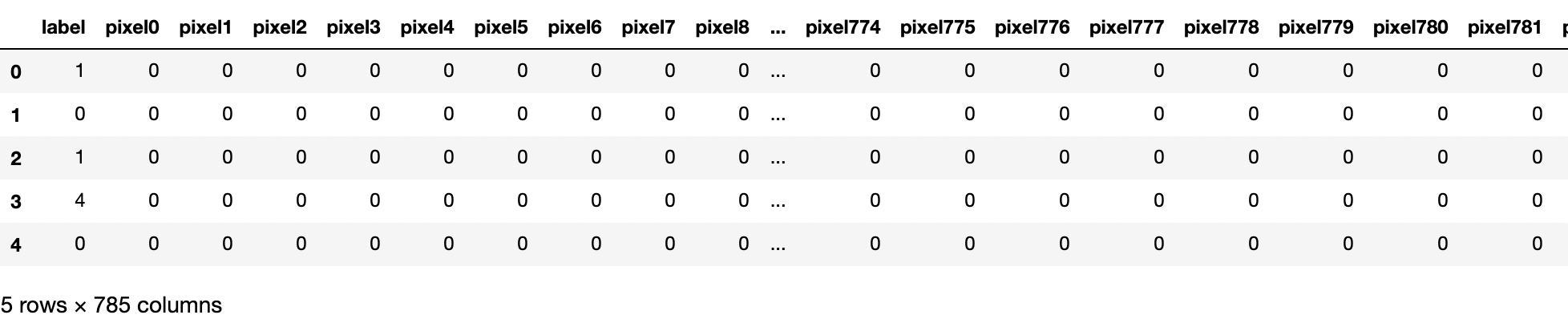

Of course the important question in what does one mean by best. That is of course why we are being paid the big bucks. We will have several definitions on best and analyze the data accordingly. Let us begin by describing the data set and gain some intuition from it. The dataset looks like the following, when stored in the 'wide' format with one column for the average price for each month

Let us first begin with some visualizations. Of course it is not reasonably possible to plot 14723 different time-series, nor would it be enlightening. Below we plot the first 200 time series in our data frame and also plot the average prices through all the zipcodes throughout our timeline where we have normalized by dividing the prices at later times by the price at the first non-Null timestamp.

As is obvious from the graphs of individual price ratios and that of the average price, there are three distinct sections of the data. The first 10 years or so show unmitigated growth, the next 6 years reflect the infamous sub-prime mortgage housing crisis and the next 6 years or so again reflect a growth trend. Of course not every zipcode is going to reflect the same trend but for starters we can keep this division in mind to actually perform the analysis.

Naive "best" definition

One naive definition of the best zipcodes to invest in can simply be the return on investment "ROI" they generate every month. Assuming the monthly ROI is x we can write

x = (1/n)*Log(final_price/initial_price) where n is the number of months. Since not all the zipcodes have data beginning on a given date, n will change depending on the zipcode we are talking about. We will have two starting dates of this analysis, one will be April 1996 which is the start of the data and the other will be February 2012 which is the end of the housing crisis looking at average prices. Plotted are the results below. As we can see the top 5 predictions have 4 of 5 entries in common. However that is only because those zipcodes only start having data after 2012 and hence the results may not be trustworthy.

However this naive analysis may not be trustworthy since it assumes that the "ROI" will continue on its present trend. It aslo does not have any measure risk associated with it. Do to a better job we will perform a SARIMA analysis on our samples.

ARIMA Analysis

The data we have for the individual cities is not stationary at all. In order to perform an ARIMA analysis we first need to make our data stationary. Also as noted above we will only analyze data from the last Jan 2013 to April 2014 i.e. safely in the post-recession region. We will also restrict ourselves to the zipcodes with SizeRanks < 1000 since processing the entire dataset is extremely time consuming. You can think of it as investment in the "big markets"

Lets begin by performing a (S)ARIMA analysis on our test data. We will use the python function auto_arima to figure out the best ARIMA model for our data. In order to make our data stationary we will try looking at the percent monthly returns. Since we are assuming that this data series is more or less stationary or at least weakly stationary our 'I' component will be (0,1). Our p and q component range from (0,3) for ARIMA and (0,2) for the seasonal component with D=0. The results of performing an ARIMA analysis on the average data set are shown below. As we can see it is a reasonable fit although the residuals are not completely drawn from a "normal". The SARIMA model (1,0,0)X(0,0,0,12) gives the best fit using the AIC criterion. Using the mean on the predicted model we get that the compounded ROI return over 2 years is 12%. We will use this as our baseline for a "risk free investment".

The rolling mean for percent monthly returns of first 100 data points are shown below. As we can see visually the data are more or less stationary or weakly stationary. We will perform the same analysis on the first 1000 data points that we performed for our average prices data, i.e. we will fit the last 6 years of data to an ARIMA model using auto_arima and then make predictions using SARIMA. We calculate the compounded returns for two years. We get a 4 zipcodes with extreme values of returns. Dropping these zipcodes since clearly an ARIMA model is not a good fit for them we impose the condition that the compunded mean returns should be less than 1.5 and positive. We then use two different criterion to report the top 5 zipcodes.

Largest mean returns

The simplest thing to do is to arrange the compounded mean returns should be the largest. This gives us the following results

Sharpe ratio

Sharpe ratio is a concept from used in trading stocks and is defined as

(return - risk_free_return)/(std). For risk free return we use the returns of the average dataset and for the standard deviation we use the spread in the predictions predicted by our ARIMA modelling. Using sharpe ratio as a metric we get the top 5 zipcodes to invest in are

(return - risk_free_return)/(std). For risk free return we use the returns of the average dataset and for the standard deviation we use the spread in the predictions predicted by our ARIMA modelling. Using sharpe ratio as a metric we get the top 5 zipcodes to invest in are

Consistency of the analysis

Let us now repeat the analysis for the last 5 years of data instead of 6 and see if we get reasonably similar results. Below we have the results for the top 5 zipcodes assuming the sharpe ratio as our metric and considering only last 5 years of data.

As we can see doing the SARIMA analysis on the 5 years data we can see there in only one entry common in the top 5 zipcodes to the 6 year analysis. However looking at the fraction of common entries we can see that the fraction of common zipcodes quickly gors to 50% within the first ~ 40 zipcodes

Since quite a few of the zipcodes are common between the two timescales we have some confidence in the stability of our model and we can choose from either models.

As we can see doing the SARIMA analysis on the 5 years data we can see there in only one entry common in the top 5 zipcodes to the 6 year analysis. However looking at the fraction of common entries we can see that the fraction of common zipcodes quickly gors to 50% within the first ~ 40 zipcodes

Since quite a few of the zipcodes are common between the two timescales we have some confidence in the stability of our model and we can choose from either models.

Future Work

Since the dataset is very big a detailed ARIMA analysis will take ~ 70 hrs to run. However there are a ways to speed it up. Since we now know the likely ARIMA solutions for 1000 data points we can constraint our fits to those solutions. Our predictions about "ROI" are worthless if there is another housing crisis in the offing. While the indications regarding that won't necessarily show up in the in the housing data we can look for indicators such as the rate of growth of average housing prices and how similar the current ARIMA analysis looks like the one right before crash. Assuming a crash will happen we need to protect our portfolio by choosing zipcodes whose downturns aren't as severe. We can do that by looking at data from the previous housing crisis.We should also keep in mind that our ARIMA solutions were often only marginally better than the best contendors. A true analysis would consider the weighted average of other ARIMA models to get predictions. We will leave this for future work.

Comments

Post a Comment