Bike Sharing Demand Prediction

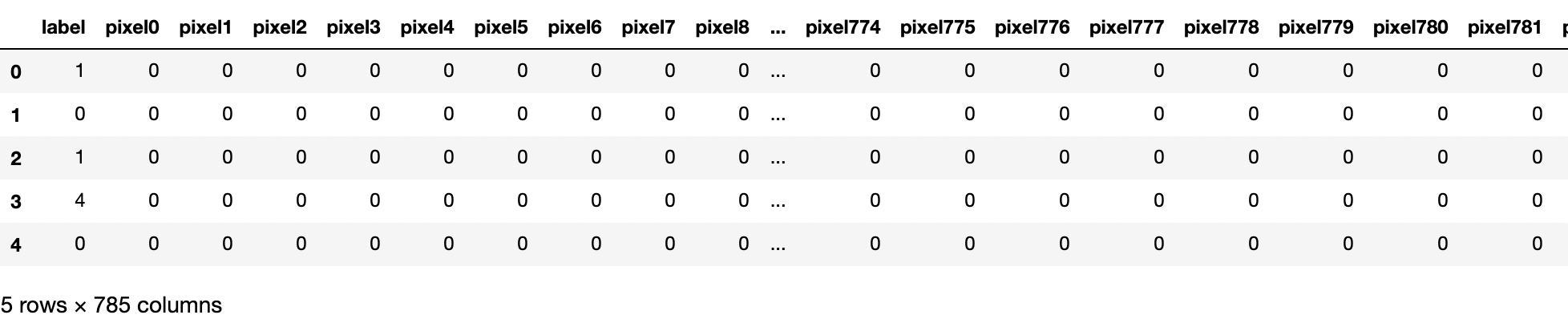

Regression to Predict Bike Sharing Demand

Introduction

In this blog post we use hourly data from a bike sharing program to predict its subsequent demand. The dataset can be found here on Kaggle. The data was collected by a bike share program in Washington, D.C. The training data covers the first 19 days of every month over two years (2011-2012), and our task is to predict the hourly usage for the remaining days of each month. Our analysis can be found here.

The dataframe looks as follows:

There are no null values, so no rows need to be removed and no values need to be imputed.

Feature Engineering and Data Visualization

The only feature engineering we perform is to split the datetime stamp into four features: time of day (hour), day of week, month, and year. The transformed dataframe looks as follows:

Note that the training data contains three target variables: registered users, casual users, and their sum, the total number of users (count). For the final submission to Kaggle we on...
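A minimal sketch of the preprocessing described above, assuming the layout of the Kaggle `train.csv` file (a `datetime` column plus the `casual`, `registered`, and `count` targets). The function names `add_datetime_features` and `check_data` are hypothetical, and the two toy rows are made-up values for illustration, not taken from the dataset.

```python
import pandas as pd


def add_datetime_features(df: pd.DataFrame) -> pd.DataFrame:
    """Split the `datetime` column into hour, day of week, month and year.

    Assumes the timestamp column is named `datetime` (as in Kaggle's
    train.csv). Returns a new frame; the input frame is left unchanged.
    """
    out = df.copy()
    stamps = pd.to_datetime(out["datetime"])
    out["hour"] = stamps.dt.hour            # time of day, 0-23
    out["dayofweek"] = stamps.dt.dayofweek  # Monday=0 ... Sunday=6
    out["month"] = stamps.dt.month          # 1-12
    out["year"] = stamps.dt.year            # 2011 or 2012 in this dataset
    # The raw timestamp string is no longer needed once it is decomposed.
    return out.drop(columns=["datetime"])


def check_data(df: pd.DataFrame) -> None:
    """Sanity checks: no missing values, and count == casual + registered."""
    if df.isna().to_numpy().any():
        raise ValueError("unexpected null values")
    if not (df["casual"] + df["registered"] == df["count"]).all():
        raise ValueError("count should equal casual + registered")


if __name__ == "__main__":
    # Hypothetical toy rows for illustration only; with the real data use
    # df = pd.read_csv("train.csv") instead.
    df = pd.DataFrame({
        "datetime": ["2011-01-01 00:00:00", "2012-12-19 23:00:00"],
        "casual": [3, 5],
        "registered": [13, 40],
        "count": [16, 45],
    })
    check_data(df)
    print(add_datetime_features(df))
```

Keeping hour, day of week, month, and year as separate integer columns should let a regression model pick up daily, weekly, and seasonal patterns independently of one another.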