King County Housing - Beginner Analysis

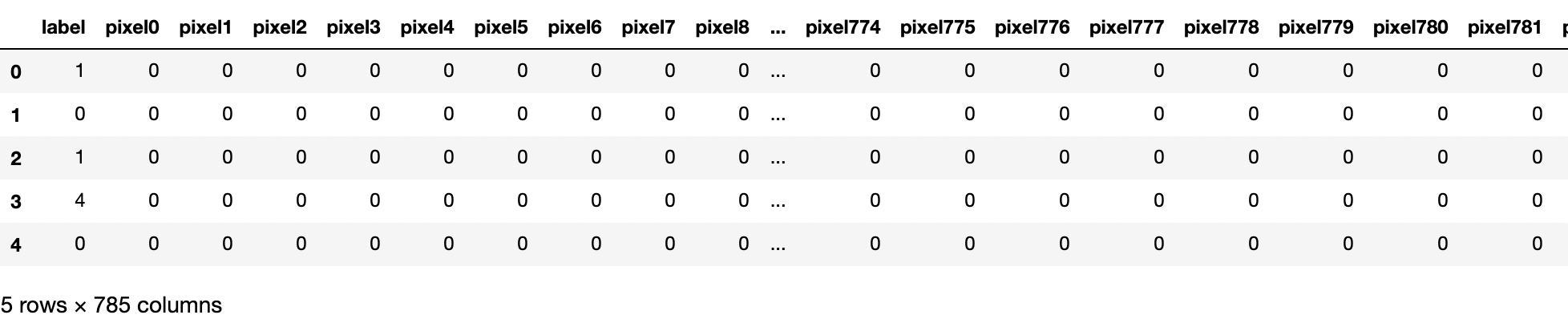

This blog post analyzes the King County housing data set, which can be found here and describes housing prices in King County between May 2014 and May 2015. The data set I use is slightly modified and needs a little more preprocessing and cleaning. This analysis can be found in this GitHub repository. Let's begin by taking a look at the contents of the data set.

- id - Unique identifier for a house

- date - Date house was sold

- price - Price, the prediction target

- bedrooms - Number of bedrooms/house

- bathrooms - Number of bathrooms/bedrooms

- sqft_living - Square footage of the home

- sqft_lot - Square footage of the lot

- floors - Total floors (levels) in house

- waterfront - House which has a view to a waterfront

- view - Has been viewed

- condition - How good the condition is ( Overall )

- grade - overall grade given to the housing unit, based on King County grading system

- sqft_above - square footage of house apart from basement

- sqft_basement - square footage of the basement

- yr_built - Year the house was built

- yr_renovated - Year the house was renovated

- zipcode - ZIP code

- lat - Latitude coordinate

- long - Longitude coordinate

- sqft_living15 - The square footage of interior housing living space for the nearest 15 neighbors

- sqft_lot15 - The square footage of the land lots of the nearest 15 neighbors

The first order of business is to understand the data and preprocess it into a format where we can begin our analysis. To do that, we go through the following steps:

- Drop useless columns. Columns like id are randomly assigned numbers and not useful for analysis, so we drop them.

- Parse the MM/DD/YYYY date into separate Month, Day and Year columns that we can use for analysis, then drop the date column.

- Convert strings to floats and replace missing values as appropriate. Note: This step needs some attention, because the right way to replace a missing value depends on the column. See the GitHub code for further clarity. Upgrade: Find a better way to fill missing values, such as KNN.

- Get rid of outliers before doing regression. We do this visually, using box-whisker plots as shown below. See the code for the choices of cuts used. Upgrade: This can be automated and made more scientific.

- Create additional features. Upgrade: Create polynomial features.

Figure: Box-whisker plots for the "continuous" features, used to remove outliers. The choices of where to place the outlier cuts are subjective.
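The preprocessing steps above can be sketched in pandas roughly as follows. This is a minimal illustration, not the code from the repository: the median fill and the 1.5 IQR price cut are assumptions standing in for the per-column choices and the visual cuts described in the post, and `preprocess` is a hypothetical helper name.

```python
import pandas as pd

def preprocess(df: pd.DataFrame) -> pd.DataFrame:
    """Illustrative cleaning pass; the fill and cut rules are assumptions."""
    out = df.drop(columns=["id"])  # id is an arbitrary label, not a feature

    # Split the MM/DD/YYYY sale date into numeric parts, then drop it.
    sold = pd.to_datetime(out["date"], format="%m/%d/%Y")
    out["month"], out["day"], out["year"] = sold.dt.month, sold.dt.day, sold.dt.year
    out = out.drop(columns=["date"])

    # Coerce strings to numbers; entries that cannot be parsed become NaN.
    out = out.apply(pd.to_numeric, errors="coerce")
    # Assumed fill rule: column median (the post chooses a rule per column).
    out = out.fillna(out.median())

    # Assumed automated cut: keep prices within 1.5 IQR of the quartiles.
    q1, q3 = out["price"].quantile([0.25, 0.75])
    iqr = q3 - q1
    keep = out["price"].between(q1 - 1.5 * iqr, q3 + 1.5 * iqr)
    return out[keep].reset_index(drop=True)

# Tiny made-up sample: one unparseable value and one extreme price.
raw = pd.DataFrame({
    "id": [1, 2, 3, 4, 5, 6],
    "date": ["05/02/2014", "06/15/2014", "01/20/2015",
             "04/03/2015", "03/11/2015", "07/04/2014"],
    "price": ["300000", "320000", "310000", "305000", "315000", "9000000"],
    "sqft_living": ["1500", "?", "1700", "1600", "1650", "9000"],
})
clean = preprocess(raw)
```

An IQR rule is only one way to automate the cuts; it will not necessarily reproduce the cuts chosen by eye from the box-whisker plots.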

We address three different questions in this analysis.

1) How well can we predict the price of the house using linear models?

2) Can we make recommendations to the seller of the house regarding when to sell it?

3) Can we give the buyer of the house a list of houses that fit their needs?

1) How well can we predict the prices of the houses?

The metric we use to judge our models is log_rmse, i.e. the RMSE of the prediction errors in the logarithm of the price. We take the log of the price as our target variable for three reasons. First, it makes the price distribution closer to normal, which brings the residuals closer to the normality that linear regression assumes. Second, it removes the need for polynomial feature engineering when the target is dominated by a single power n of a feature, since then log(y) = n*log(x) is linear in log(x). Third, it puts expensive houses on an equal footing with cheaper houses in our regression analysis, because errors are measured in relative rather than absolute terms.
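As a minimal sketch of the metric, assuming the natural logarithm (the post does not say which base is used) and a hypothetical function name `log_rmse`:

```python
import numpy as np

def log_rmse(y_true, y_pred):
    """RMSE between the natural logs of true and predicted prices."""
    log_true = np.log(np.asarray(y_true, dtype=float))
    log_pred = np.log(np.asarray(y_pred, dtype=float))
    return float(np.sqrt(np.mean((log_true - log_pred) ** 2)))

# A 10% overestimate costs the same on a cheap and an expensive house.
cheap = log_rmse([100_000], [110_000])
expensive = log_rmse([1_000_000], [1_100_000])
```

Both calls return log(1.1), which illustrates the "equal footing" point: the metric depends on the ratio of predicted to true price, not on the absolute difference.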

To benchmark our models we start with a naive benchmark that predicts prices based only on the zipcode and the living area (in sqft) of the house (see code). This gives us a log_rmse of 0.249, and any linear regression model we use has to beat that. The residuals for our benchmark are given below. As we can see, the residuals are not independent of the target price, so a better job can be done. Throughout this document we use RidgeCV from sklearn for our analysis.

Figure: Residuals for our benchmark model.

Building regression models is an iterative process. We check if something works. If it does, we keep it and try the next thing, until we get an answer that seems to be converging. The first thing we try in order to beat our benchmark is to take our "continuous" variables, check whether they are skewed, and log transform the skewed ones to make them more normal. This improves our fits by a lot but still doesn't beat the benchmark: we get a log_rmse of 0.32.

Figure: Improvement in log_rmse as we keep adding features, from most correlated with price to least.

Figure: Residuals after log transforming the "continuous" variables.
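The log transform of skewed "continuous" variables can be sketched as below. The skewness threshold of 0.75 and the use of `log1p` (so that zeros, e.g. in sqft_basement, stay finite) are assumptions, and `log_transform_skewed` is a hypothetical helper, not necessarily what the repository does.

```python
import numpy as np
import pandas as pd

def log_transform_skewed(df, columns, threshold=0.75):
    """Apply log1p to the listed columns whose skewness exceeds threshold."""
    out = df.copy()
    skewness = out[columns].skew()
    # Only right-skewed columns benefit from a log transform.
    skewed = skewness[skewness > threshold].index.tolist()
    if skewed:
        out[skewed] = np.log1p(out[skewed])
    return out, skewed

# Made-up sample: lot sizes have a long right tail, latitudes do not.
demo = pd.DataFrame({
    "sqft_lot": [5000.0, 6000.0, 5500.0, 7000.0, 200000.0],
    "lat": [47.50, 47.60, 47.55, 47.65, 47.45],
})
transformed, skewed = log_transform_skewed(demo, ["sqft_lot", "lat"])
```

Here only sqft_lot is transformed; lat is left unchanged because its values are symmetric.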

The reason the model is not performing as well as the benchmark is that the most important feature, the zipcode, which controls a big chunk of any real estate value, has not been taken into account. Since zipcode is a 5 digit number with no monotonic relationship with the target variable of price, it is a categorical variable. We have two options for dealing with a categorical variable: either turn it into a "continuous" variable, or use the pandas get_dummies function to do one hot encoding on zipcode. We first try the former and relabel the zipcodes with continuous values such that zipcode vs price is a linear fit. We get a log_rmse of 0.218.
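One way to relabel zipcodes so that they line up with price is to replace each zipcode with the mean log price of the houses in it. The post does not spell out the exact relabeling used, so the sketch below is an assumption, and `encode_zipcode_by_price` and the `zipcode_encoded` column are hypothetical names.

```python
import numpy as np
import pandas as pd

def encode_zipcode_by_price(df):
    """Add a column holding the mean log price of each row's zipcode."""
    out = df.copy()
    log_price = np.log(out["price"])
    # transform("mean") broadcasts each group's mean back to its rows.
    out["zipcode_encoded"] = log_price.groupby(out["zipcode"]).transform("mean")
    return out

# Made-up sample with one cheaper and one pricier zipcode.
demo = pd.DataFrame({
    "zipcode": [98001, 98001, 98004, 98004],
    "price": [200000.0, 300000.0, 1000000.0, 1000000.0],
})
encoded = encode_zipcode_by_price(demo)
```

When evaluating a model, the group means should be computed on the training split only; otherwise the encoded feature leaks the target of the held-out houses.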

Instead of using zipcode as a continuous variable, we can do one hot encoding with pandas get_dummies to create more features. Doing this we get a better log_rmse of 0.193. Since one hot encoding is better, we keep that.
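A rough sketch of the one hot encoding route, using `pd.get_dummies` and `RidgeCV` as named in the post. The helper name, the `alphas` grid and the synthetic data are assumptions made for illustration; they are not the post's data or settings.

```python
import numpy as np
import pandas as pd
from sklearn.linear_model import RidgeCV

def fit_with_zipcode_dummies(df, numeric_cols, alphas=(0.1, 1.0, 10.0)):
    """One hot encode zipcode, then fit RidgeCV on the log of the price."""
    dummies = pd.get_dummies(df["zipcode"], prefix="zip", dtype=float)
    X = pd.concat([df[numeric_cols], dummies], axis=1)
    y = np.log(df["price"])
    model = RidgeCV(alphas=alphas).fit(X, y)
    return model, X

# Synthetic data: log price = zipcode offset + size effect + noise.
rng = np.random.default_rng(0)
n = 60
demo = pd.DataFrame({
    "zipcode": rng.choice([98001, 98004, 98103], size=n),
    "log_sqft_living": np.log(rng.uniform(800, 4000, size=n)),
})
zip_effect = demo["zipcode"].map({98001: 12.3, 98004: 13.6, 98103: 13.0})
noise = rng.normal(0, 0.05, size=n)
demo["price"] = np.exp(zip_effect + 0.5 * (demo["log_sqft_living"] - 7.5) + noise)

model, X = fit_with_zipcode_dummies(demo, ["log_sqft_living"])
predicted_log_price = model.predict(X)
```

Each zipcode gets its own column and therefore its own fitted offset, which is why this handles a label with no monotonic relationship to price.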

In our final model we add the remaining categorical features, including the ones we engineered. We then fit a ridge regression model to get a final log_rmse of 0.176. The improvement of our score with each feature is plotted below. We have also plotted our final residuals.

Figure: Score improvement as we add more features, from most correlated to least correlated.

Conclusions for our final model:

- The final log_rmse of 0.176 is much better than our naive benchmark of 0.249.

- get_dummies worked better than converting categorical variables to "linear" ones. However, there is room for improvement there.

- The residuals look better but still show a dependence on the target variable, so there is room for improvement.

- We need better feature engineering, and should also try alternatives to ridge regression.

2) Can we make recommendations to the seller of the house regarding when to sell it?

We want to figure out if there is a "best" time to sell your house in terms of its price. Of course every house is different, and if we classify houses on every feature available then there won't be enough data. Hence we choose to group houses based on zipcode and grade, two features the owner is likely to know. Given zipcode and grade, we group the data according to those features and predict the best time to sell the house. The output of our function looks like the following.

If there is enough information available, the function makes recommendations based on grade and zipcode. If not, it makes them based on zipcode alone. The error bars just correspond to the standard deviations of the data in each group. They are only meant to guide the eye; there is no reason to expect the errors to be normally distributed. Upgrade: A useful improvement would be to do hypothesis testing and output the probability that the price of the house will go up or down if sold now vs later, like they do for flight prices. However, I have a feeling that the data set is too small to get enough confidence.

Querying every zipcode and grade we have, we find that on aggregate the best time to sell a house is April, while the worst is January. See code for further details.
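The grouping logic described above might look roughly like this. It assumes a numeric `month` column from the preprocessing step; `best_month_to_sell` and the `min_sales` cutoff for "enough information" are hypothetical, since the post does not state its threshold.

```python
import pandas as pd

def best_month_to_sell(df, zipcode, grade=None, min_sales=30):
    """Return the month with the highest mean price, plus monthly stats."""
    subset = df[df["zipcode"] == zipcode]
    if grade is not None:
        by_grade = subset[subset["grade"] == grade]
        # Use the grade only if it leaves enough sales; else zipcode alone.
        if len(by_grade) >= min_sales:
            subset = by_grade
    monthly = subset.groupby("month")["price"].agg(["mean", "std", "count"])
    return monthly["mean"].idxmax(), monthly

# Made-up sales in a single zipcode.
demo = pd.DataFrame({
    "zipcode": [98001] * 5,
    "grade": [7, 7, 7, 7, 8],
    "month": [1, 1, 4, 4, 6],
    "price": [300000, 310000, 350000, 360000, 500000],
})
best_grade7, stats_grade7 = best_month_to_sell(demo, 98001, grade=7, min_sales=2)
best_grade8, stats_grade8 = best_month_to_sell(demo, 98001, grade=8, min_sales=2)
```

The `std` column corresponds to the error bars mentioned above. With only one grade 8 sale, the second call falls back to all sales in the zipcode.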

3) Can we give the buyer of the house a list of houses that fit their needs?

Typically buyers want to know what the expected price of a house would be given their criteria, such as location, sqft, bedrooms, bathrooms, zipcode etc. In the code we have built just such a function, which prints out the zipcode and the average price based on your selection. The function also returns a dataframe containing the pertinent information sorted by house price. See code for details. Upgrade: With live data we can use this function to create visualizations of the locations and attributes of the houses and plot them on a map. Combining it with an advanced version of what we did in Q2, we can recommend that the buyer buy the house or wait, with associated confidence levels.
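A minimal sketch of such a buyer query, assuming simple "at least" filters; the function name `find_houses` and its parameters are hypothetical, and the repository version may filter differently.

```python
import pandas as pd

def find_houses(df, zipcode=None, min_sqft=0, min_bedrooms=0, min_bathrooms=0):
    """Filter houses by the buyer's criteria, sorted by ascending price."""
    mask = (
        (df["sqft_living"] >= min_sqft)
        & (df["bedrooms"] >= min_bedrooms)
        & (df["bathrooms"] >= min_bathrooms)
    )
    if zipcode is not None:
        mask &= (df["zipcode"] == zipcode)
    matches = df[mask].sort_values("price")
    if not matches.empty:
        # Print the average price of the matching houses per zipcode.
        print(matches.groupby("zipcode")["price"].mean())
    return matches

# Made-up listings.
demo = pd.DataFrame({
    "zipcode": [98001, 98001, 98004, 98004],
    "sqft_living": [1500, 2200, 2500, 1200],
    "bedrooms": [3, 4, 4, 2],
    "bathrooms": [2.0, 2.5, 3.0, 1.0],
    "price": [300000, 420000, 950000, 600000],
})
matches = find_houses(demo, min_sqft=2000, min_bedrooms=4)
```

Leaving `zipcode` as `None` searches every zipcode, which lets the buyer compare average prices across locations for the same criteria.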
